Enhancing Transparency in Generative AI: Strategies for Ethical and Accountable Deployment

Generative AI (GenAI) has swiftly moved from research labs into mainstream industry applications, powering everything from automated content creation to decision support systems. A 2023 global survey by McKinsey found that one-third of companies were already using GenAI in at least one business function [1], and the market for generative AI software and services surged to over $25 billion by 2024 [2]. This rapid adoption underscores GenAI’s growing role across sectors – but it also shines a spotlight on a critical need: transparency. As organizations leverage complex AI models, questions emerge about how these models make decisions, what data they use, and whether hidden biases or errors lurk within their outputs. Transparent AI systems are essential to address ethical concerns (like biased or misleading content), comply with evolving regulations, and maintain trust with users and stakeholders.

Transparency in GenAI means openly communicating how AI models work – their design, training data, decision criteria, and limitations. This article explores key themes and best practices for achieving such openness: from explainability techniques that make “black-box” AI decisions understandable, to documentation standards and compliance audits that keep AI use in check, to emerging industry frameworks that can guide ethical and accountable deployment. By proactively enhancing transparency, organizations can ensure their GenAI innovations remain ethical, compliant, and worthy of users’ trust.

Why Transparency in GenAI Matters

Ethical Implications: Opaque GenAI can produce harmful, biased outcomes without explanation, as with recruitment algorithms that discriminated against women [3]. When an AI system hallucinates or amplifies biases, who is accountable? Transparency enables accountability and ethical deployment by exposing biases to scrutiny, helping assign responsibility when systems fail, and ensuring that systems operate fairly [8].

Regulatory Landscape: Regulations around the world increasingly mandate AI transparency. The EU AI Act imposes transparency obligations on high-risk systems and requires that AI-generated content, such as deepfakes, be disclosed or labeled [5]. The US Blueprint for an AI Bill of Rights emphasizes a "notice and explanation" principle [6]. While the regulatory environment is still evolving, the trend is clear: inadequate transparency risks legal non-compliance [7].

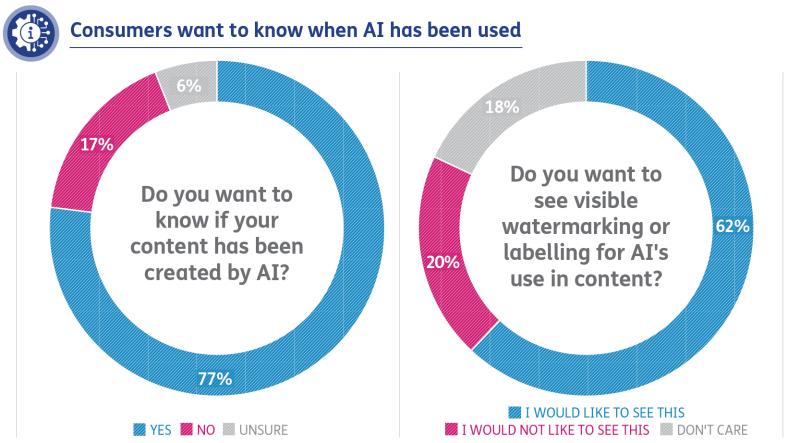

Business and Consumer Trust: Transparency directly affects public trust in AI deployment. Surveys show that 77% of consumers want to know when content is AI-generated [3], and 63% want companies to disclose the AI tools they use. Hidden AI breeds mistrust and resistance, while transparency builds confidence. Companies unable to explain harmful AI outcomes face severe backlash, making transparency fundamental to market success [4].

Figure 1: Consumers want to know when AI is used. Left: 77% of surveyed consumers want to be notified if content they encounter was generated by AI, versus only 17% who do not (6% unsure). Right: 62% would like AI-generated content to be clearly labeled or watermarked, far outnumbering those opposed. Image courtesy – baringa.com.

Key Strategies for Transparency

Achieving transparency in generative AI is a multifaceted challenge. It requires technical tools, process changes, and cultural shifts within organizations. Below are key strategies – spanning explainable AI methods, documentation practices, audits, training, and open collaboration – that companies can deploy to make their GenAI systems more transparent and accountable.

Implement Explainable AI (XAI) Techniques

One of the most direct ways to enhance transparency is to make the AI’s decisions explainable. Explainable AI (XAI) techniques are designed to shed light on how complex models arrive at their outputs. For example, SHAP (SHapley Additive exPlanations) and LIME (Local Interpretable Model-agnostic Explanations) are popular tools that attribute an AI’s prediction to individual input features, helping users understand which factors were most influential. SHAP uses game-theoretic Shapley values to measure each feature’s contribution to a given result in a consistent way, while LIME fits a simple local model around a specific instance to explain that instance’s prediction. Counterfactual explanations offer another approach: they tell us what could be changed in an input to get a different outcome, highlighting the key drivers of the original decision [9]. (For instance, a counterfactual explanation might say: “If income were $5,000 higher, the loan application would be approved,” indicating income was a decisive factor.) By employing such XAI methods, organizations can turn an AI system’s opaque logic into human-readable insights, whether through visualization dashboards, natural language explanations, or interactive tools. This not only helps engineers and auditors vet the model’s behavior, but also lets end-users gain confidence in AI outputs because they can see why the AI made a given recommendation or generated a given piece of content. Explainability is especially crucial for high-stakes applications (like healthcare or finance) where users demand justification for automated decisions.
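To make the game-theoretic idea behind SHAP concrete, the sketch below computes exact Shapley values for a toy scoring function by enumerating every coalition of features. It is a minimal illustration, not the SHAP library: the `loan_score` model, its feature names, and the convention of replacing "absent" features with baseline values are assumptions chosen for clarity. Exact enumeration needs on the order of 2^n model calls, which is why SHAP relies on approximations and model-specific algorithms for real models.

```python
from itertools import combinations
from math import factorial
from typing import Callable, Dict, Set

Features = Dict[str, float]

def loan_score(x: Features) -> float:
    """Hypothetical toy model: a linear score plus one income/credit interaction term."""
    return (0.5 * x["income"] + 0.3 * x["credit_history"] - 0.4 * x["debt"]
            + 0.1 * x["income"] * x["credit_history"])

def shapley_values(model: Callable[[Features], float],
                   instance: Features, baseline: Features) -> Features:
    """Exact Shapley values; features outside a coalition take their baseline value."""
    names = list(instance)
    n = len(names)

    def value(coalition: Set[str]) -> float:
        # Coalition members come from the explained instance, the rest from the baseline.
        x = {f: (instance[f] if f in coalition else baseline[f]) for f in names}
        return model(x)

    phi: Features = {}
    for f in names:
        others = [g for g in names if g != f]
        total = 0.0
        for k in range(n):  # coalition sizes 0 .. n-1 drawn from the other features
            weight = factorial(k) * factorial(n - k - 1) / factorial(n)
            for subset in combinations(others, k):
                s = set(subset)
                total += weight * (value(s | {f}) - value(s))  # marginal contribution of f
        phi[f] = total
    return phi

instance = {"income": 2.0, "credit_history": 1.0, "debt": 1.5}
baseline = {"income": 0.0, "credit_history": 0.0, "debt": 0.0}
phi = shapley_values(loan_score, instance, baseline)
print({k: round(v, 3) for k, v in phi.items()})
# {'income': 1.1, 'credit_history': 0.4, 'debt': -0.6}
```

The interaction term's contribution (0.2) is split evenly between income and credit history, and the three values sum to the difference between the instance's score and the baseline score, which is the "additive" property SHAP's name refers to.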

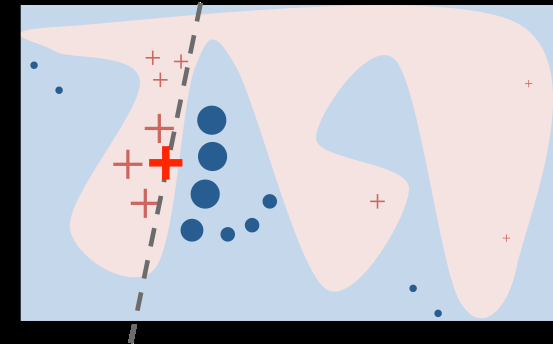

Figure 2: Explainability technique (LIME) in action. The complex “black-box” model’s decision boundary (pink and blue regions) is locally approximated around a specific instance (the red cross) by a simple linear model (dashed line). The method generates perturbed samples around the instance (blue dots, weighted by proximity) and fits a surrogate model that mimics the AI’s behavior in that neighborhood. This yields an interpretable explanation for why the AI classified the red-cross instance a certain way, demonstrating how XAI can provide insight into GenAI decisions without fully revealing the global model. Image courtesy – Ribeiro et al., “‘Why Should I Trust You?’: Explaining the Predictions of Any Classifier” [15].
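The procedure in Figure 2 can be sketched in plain Python. This is a simplified LIME-style illustration, not the `lime` package: it perturbs the instance with Gaussian noise, weights each sample with an exponential proximity kernel, and fits a weighted linear surrogate by least squares. The `black_box` function, the noise scale, and the kernel width are all assumptions; the real LIME method also maps inputs to an interpretable representation and selects a small set of features.

```python
import math
import random
from typing import Callable, List, Sequence

def black_box(x: Sequence[float]) -> float:
    """Hypothetical nonlinear model standing in for an opaque classifier's score."""
    return math.tanh(2.0 * x[0] - x[1] ** 2)

def solve(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve the small linear system a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back-substitution
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x

def local_surrogate(model: Callable[[Sequence[float]], float], instance: Sequence[float],
                    n_samples: int = 500, noise: float = 0.3,
                    kernel_width: float = 0.5, seed: int = 0) -> List[float]:
    """Fit a proximity-weighted linear model around `instance`; returns [intercept, w1, ..., wd]."""
    rng = random.Random(seed)
    rows, targets, weights = [], [], []
    for _ in range(n_samples):
        z = [xi + rng.gauss(0.0, noise) for xi in instance]       # perturbed sample
        dist2 = sum((zi - xi) ** 2 for zi, xi in zip(z, instance))
        rows.append([1.0] + z)                                      # leading 1.0 = intercept
        targets.append(model(z))                                    # query the black box
        weights.append(math.exp(-dist2 / kernel_width ** 2))       # nearby samples count more
    p = len(instance) + 1
    # Weighted normal equations: (X^T W X) beta = X^T W y
    xtwx = [[sum(w * r[i] * r[j] for r, w in zip(rows, weights)) for j in range(p)] for i in range(p)]
    xtwy = [sum(w * r[i] * y for r, y, w in zip(rows, targets, weights)) for i in range(p)]
    return solve(xtwx, xtwy)

coef = local_surrogate(black_box, [0.2, 0.5])
print("intercept=%.3f, w_x0=%.3f, w_x1=%.3f" % tuple(coef))
```

The surrogate's weights are only valid near the explained instance: here the first feature pushes the score up and the second pushes it down, which is the kind of local, human-readable statement Figure 2 illustrates.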

To implement XAI in practice, teams should integrate these tools into their AI development workflow. This could mean generating SHAP feature-attribution plots for model predictions, providing LIME-based explanation reports for individual AI-generated outputs, or giving users an interactive slider to explore counterfactual scenarios. Another tactic is to select inherently interpretable models when the accuracy trade-off permits, for example, using a transparent rules-based system or a small decision tree in place of an inscrutable deep neural network for certain tasks. The goal is to ensure that for every GenAI output there is a corresponding explanation that a knowledgeable person can audit and a layperson can at least partially understand. Organizations such as DARPA and IEEE have been advancing XAI research, and many open-source libraries now make it easier to plug explainability into AI systems. By adopting XAI, companies demonstrate a commitment to “showing their work”: making the hidden workings of generative models visible and therefore accountable.
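A counterfactual explanation like the loan example can be produced by searching for the smallest change to one actionable feature that flips the decision. The sketch below assumes a hypothetical, inherently interpretable `approve` rule and a fixed search step; it is a brute-force illustration, whereas methods such as the one proposed by Wachter et al. [9] optimize a combined distance-and-prediction objective over many features at once.

```python
from dataclasses import dataclass, replace
from typing import Optional

@dataclass(frozen=True)
class Applicant:
    income: float       # annual income in dollars
    debt: float         # outstanding debt in dollars
    credit_score: int

def approve(a: Applicant) -> bool:
    """Hypothetical rule-based approval model (an assumption for illustration)."""
    return a.credit_score >= 650 and a.income - 0.5 * a.debt >= 40_000

def income_counterfactual(a: Applicant, step: float = 1_000,
                          max_increase: float = 100_000) -> Optional[float]:
    """Smallest income increase (in multiples of `step`) that turns a rejection into an approval."""
    if approve(a):
        return 0.0
    increase = step
    while increase <= max_increase:
        if approve(replace(a, income=a.income + increase)):  # change only the income feature
            return increase
        increase += step
    return None  # no income change in range flips the decision (e.g. credit score blocks it)

applicant = Applicant(income=45_000, debt=20_000, credit_score=700)
delta = income_counterfactual(applicant)
print(f"If income were ${delta:,.0f} higher, the application would be approved.")
# If income were $5,000 higher, the application would be approved.
```

Returning `None` when no counterfactual exists is itself informative: it tells the user that income is not the binding constraint, which an interactive slider would reveal in the same way.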

Comprehensive AI Model Documentation

Transparency requires documentation as well as technology. Leading AI organizations have introduced frameworks such as Model Cards (Google) [10] and Transparency Notes (Microsoft) that function as AI "nutrition labels," detailing intended use, performance metrics, biases, data provenance, limitations, and maintenance history [11]. These documents help stakeholders understand the context in which an AI system should be used and help prevent misuse.

Organizations should publish documentation specifying model architecture, training data sources, bias testing outcomes, and usage guidelines. Internal teams may maintain more detailed "system cards" that map how models interact with other components. The industry is also increasingly adopting "datasheets for datasets" to document how training data was collected and preprocessed. Coalitions such as the Partnership on AI, through its ABOUT ML initiative, are working to standardize documentation practices. Comprehensive documentation facilitates regulatory compliance and builds trust. Some companies, such as IBM, already voluntarily disclose training data sources, demonstrating that transparency can coexist with intellectual property protection.
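One way to keep a model card current is to store it as structured data in the model's repository, so it is versioned and reviewed alongside the model itself. The sketch below uses a hypothetical schema whose fields mirror the items listed above (intended use, metrics, known biases, data provenance, limitations, maintenance history); it is not Google's official Model Card Toolkit schema, and the model name and all values are placeholders.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import Dict, List

@dataclass
class ModelCard:
    """Hypothetical model card schema; field names are illustrative, not a standard."""
    model_name: str
    version: str
    intended_use: str
    out_of_scope_uses: List[str]
    training_data_sources: List[str]
    performance_metrics: Dict[str, float]
    known_biases: List[str]
    limitations: List[str]
    maintenance_history: List[str] = field(default_factory=list)

    def missing_sections(self) -> List[str]:
        """Names of empty sections, so a release review can block until they are filled in."""
        return [name for name, value in asdict(self).items() if not value]

    def to_json(self) -> str:
        """Machine-readable form for registries or compliance tooling."""
        return json.dumps(asdict(self), indent=2)

    def to_markdown(self) -> str:
        """Human-readable form for publication."""
        out = [f"# Model Card: {self.model_name} (v{self.version})", "",
               f"Intended use: {self.intended_use}"]
        sections = {
            "Out-of-scope uses": self.out_of_scope_uses,
            "Training data sources": self.training_data_sources,
            "Performance metrics": [f"{k}: {v:.3f}" for k, v in self.performance_metrics.items()],
            "Known biases": self.known_biases,
            "Limitations": self.limitations,
            "Maintenance history": self.maintenance_history,
        }
        for title, items in sections.items():
            out += ["", f"## {title}"] + [f"- {item}" for item in items or ["(not documented)"]]
        return "\n".join(out)

card = ModelCard(
    model_name="support-summarizer",  # hypothetical model
    version="1.2.0",
    intended_use="Summarizing customer-support tickets for internal agents.",
    out_of_scope_uses=["Legal or medical advice", "Automated decisions about customers"],
    training_data_sources=["Anonymized support tickets, 2021-2023 (internal)"],
    performance_metrics={"rougeL": 0.412},  # placeholder value, not a real result
    known_biases=["Lower summary quality on non-English tickets"],
    limitations=["May omit details from very long threads"],
)
print(card.missing_sections())  # ['maintenance_history']
print(card.to_markdown())
```

Rendering both JSON and Markdown from one source keeps the published "nutrition label" and the machine-readable record from drifting apart.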

AI Auditing and Compliance

Explainability and documentation lay the foundation for regular AI audits and governance checks. Like financial auditing, AI auditing examines an algorithm's behavior against ethical and legal standards, testing for bias, robustness, security, and compliance [12]. Organizations should establish governance frameworks with periodic assessments by cross-functional teams to ensure AI systems operate as intended and do not drift into problematic behaviors.
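Checking for drift can be partly automated. One common heuristic, borrowed from credit-risk model monitoring, is the population stability index (PSI), which compares how a model input or output score is distributed in production against a reference window. The sketch below is a minimal pure-Python version; the bin edges, the sample data, and the often-quoted 0.1 and 0.25 alert thresholds are rules of thumb rather than standards and are assumptions to tune per system.

```python
import math
from bisect import bisect_right
from typing import List, Sequence

def bin_shares(values: Sequence[float], edges: Sequence[float], eps: float = 1e-6) -> List[float]:
    """Share of values in each bin defined by sorted interior `edges`; eps avoids log(0)."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect_right(edges, v)] += 1
    total = len(values)
    return [max(c / total, eps) for c in counts]

def psi(reference: Sequence[float], current: Sequence[float], edges: Sequence[float]) -> float:
    """Population stability index: sum over bins of (cur - ref) * ln(cur / ref)."""
    ref = bin_shares(reference, edges)
    cur = bin_shares(current, edges)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))

def drift_status(score: float) -> str:
    """Rule-of-thumb thresholds (assumption): < 0.1 stable, 0.1-0.25 monitor, > 0.25 investigate."""
    if score < 0.1:
        return "stable"
    return "monitor" if score <= 0.25 else "investigate"

edges = [0.25, 0.5, 0.75]                               # hypothetical score bins
reference = [i / 100 for i in range(100)]               # scores observed at deployment time
shifted = [min(v + 0.3, 0.99) for v in reference]       # simulated production scores
print(drift_status(psi(reference, reference, edges)))   # stable
print(drift_status(psi(reference, shifted, edges)))     # investigate
```

A PSI alert does not say the model is wrong, only that it is now seeing data unlike what it was validated on, which is the trigger for the periodic human review described above.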

Why does AI auditing matter? Because "with great AI comes great accountability." Without oversight, AI systems risk perpetuating bias, creating legal exposure, or causing reputational damage. Proper governance includes pre-deployment reviews, continuous monitoring, and post-incident audits. Many organizations now form AI ethics committees and follow frameworks such as the NIST AI Risk Management Framework or ISO standards like ISO/IEC 42001. Emerging automated auditing tools can scan for fairness metrics and generate transparency scores. Regular auditing turns transparency from a one-time effort into an ongoing practice, ensuring GenAI remains accountable throughout its lifecycle.
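As an example of the fairness checks such audits run, the sketch below computes two widely used group metrics over a batch of binary decisions: the demographic parity difference (the gap between groups' positive-outcome rates) and the disparate impact ratio (lowest rate divided by highest), which is often compared against the "four-fifths" (0.8) rule of thumb from US employment-selection guidance. The group labels and decisions are made-up placeholders; a real audit would also examine error-rate metrics such as equalized odds and check statistical significance.

```python
from collections import defaultdict
from typing import Dict, Sequence

def selection_rates(groups: Sequence[str], decisions: Sequence[int]) -> Dict[str, float]:
    """Positive-decision rate per group (1 = favorable outcome, 0 = unfavorable)."""
    totals: Dict[str, int] = defaultdict(int)
    positives: Dict[str, int] = defaultdict(int)
    for g, d in zip(groups, decisions):
        totals[g] += 1
        positives[g] += d
    return {g: positives[g] / totals[g] for g in totals}

def fairness_report(groups: Sequence[str], decisions: Sequence[int],
                    threshold: float = 0.8) -> Dict[str, object]:
    """Summarize group selection rates, their gap, and their ratio against a threshold."""
    rates = selection_rates(groups, decisions)
    highest, lowest = max(rates.values()), min(rates.values())
    ratio = lowest / highest if highest > 0 else 1.0
    return {
        "selection_rates": rates,
        "demographic_parity_difference": highest - lowest,
        "disparate_impact_ratio": ratio,
        "passes_four_fifths_rule": ratio >= threshold,
    }

# Placeholder audit sample: 10 decisions for each of two hypothetical groups.
groups = ["A"] * 10 + ["B"] * 10
decisions = [1, 1, 1, 1, 1, 1, 0, 0, 0, 0] + [1, 1, 1, 0, 0, 0, 0, 0, 0, 0]
report = fairness_report(groups, decisions)
print(report["disparate_impact_ratio"], report["passes_four_fifths_rule"])  # 0.5 False
```

A failing ratio is a signal for investigation rather than proof of discrimination, so the report is best logged alongside the model card and reviewed by the governance team.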

Ethical AI Training and Culture

Transparency requires training people, not just implementing technology and processes. An overlooked strategy for ethical AI deployment is educating the workforce and leadership in responsible practices. Everyone involved with GenAI should understand transparency principles. Teams need education on algorithmic bias, fairness, privacy, and social impacts so they integrate transparency from the start. Engineers trained to recognize bias will proactively test models on diverse inputs and report performance disparities.

Organizations should develop formal ethical AI training programs covering case studies of AI failures, tutorials on XAI tools, and guidelines for communicating with stakeholders. Leadership must champion transparency to set the tone for the company. Without proper training, staff may misuse AI or miss bias and privacy risks [13]. A workforce educated in ethical AI becomes the first line of defense for transparency. Companies should cultivate cultures that reward raising concerns through clear channels, including anonymous reporting mechanisms. Equipping people with knowledge and a transparency-first mindset complements technical measures, making AI deployments more ethical and accountable.

Open Models and Industry Collaboration

Transparency can also be enhanced by adopting a more open approach to AI development. While companies traditionally guard AI models as proprietary, excessive secrecy undermines accountability. Forward-thinking organizations share parts of their AI systems through open-sourcing, research publications, or industry initiatives. Open-sourcing GenAI models allows external experts to inspect their behavior and provide valuable feedback. In 2023, for example, Meta released its LLaMA language models to researchers, and openly released models such as Stable Diffusion have enabled independent audits. Companies unable to fully open-source their models might still share pre-trained versions or anonymized datasets.

Companies can balance transparency with IP protection by publishing technical details without revealing proprietary elements, for example by releasing papers that describe model architecture, training approaches, and fairness metrics without sharing production code. Participating in multi-stakeholder forums advances industry-level transparency. Initiatives such as Stanford's Foundation Model Transparency Index, which scores major foundation model developers on what they disclose, benchmark these efforts [14]. Engaging with such external efforts helps organizations anticipate regulatory demands and shape transparency norms. Openness builds a wider ecosystem of trust and promotes accountable AI. Companies that contribute to open AI resources demonstrate ethical leadership, enhancing their reputation and fostering innovation through shared learning.

Challenges and Roadblocks

Implementing the above transparency strategies is not without challenges. Organizations often encounter several hurdles on the path to a more open and explainable AI deployment:

- Balancing Transparency with IP and Security: Companies fear that revealing too much about their AI models could compromise intellectual property or competitive advantage. Proprietary algorithms and datasets are valuable assets, which creates internal resistance to transparency: legal teams may oppose publishing model information, while executives worry about setting precedents that competitors won't follow. Security concerns exist too, since detailed disclosures might expose exploitable vulnerabilities. Organizations must build trust through transparency without compromising core IP or enabling misuse [7]. Industry-wide guidelines could help determine which aspects require disclosure and which can remain confidential. Fortunately, transparency doesn't demand exposing source code or trade secrets; higher-level explanations, dataset information, and ethical considerations often satisfy stakeholders while protecting proprietary elements.

- Technical Complexity of AI Models: Modern GenAI models, especially deep neural networks with billions of parameters, are notoriously complex, and even experts struggle to understand why a model produces a specific output. Generating faithful explanations requires specialized algorithms, significant computing resources, or simplifying assumptions that may reduce fidelity. Communicating technical explanations to non-technical audiences without oversimplifying them is another challenge. Smaller organizations without research teams may lack the capability to implement XAI tools or maintain comprehensive documentation. Some AI behaviors resist any simple explanation; explaining "why" a black-box model behaved as it did remains difficult even for experts [8]. Interpretability research continues, but in the meantime companies must decide case by case, perhaps using simpler models where possible or partnering with experts for complex ones. Perfect transparency may not be immediately achievable for every system.

- Evolving Regulatory and Compliance Uncertainty: The AI regulatory landscape is evolving rapidly, and approaches diverge across jurisdictions, creating uncertainty about disclosure requirements. The EU AI Act entered into force in August 2024, but its obligations phase in over several years and detailed implementation guidance is still being developed; US guidelines are currently voluntary but may become law. New measures, such as California's AI Transparency Act requiring disclosures for certain AI-generated content, continue to emerge. The goalposts for "adequate transparency" keep shifting, and compliance in one jurisdiction may not satisfy another. Organizations risk doing too little (legal exposure) or too much (oversharing). Non-standard, ad-hoc AI disclosures have limited utility, underscoring the need for uniform transparency frameworks. Companies must stay agile by tracking regulatory developments, leaning toward greater transparency as the safer option, and engaging with policymakers through industry groups to address concerns about impractical mandates. This uncertainty should ease as frameworks mature and converge, but navigating the current patchwork of rules remains challenging for global GenAI deployments.

Conclusion

Transparency is the bedrock of ethical AI adoption: how an AI reaches its output can be as important as the output itself. Explainability techniques, documentation, audits, training, and open collaboration all help transform AI systems from inscrutable wizards into accountable partners. Despite challenges such as competitive pressures, technical limitations, and regulatory uncertainty, the trend toward transparency is clear. Organizations should be proactive and implement transparency measures now, even incrementally: develop model cards, run bias tests, label AI-generated content, educate teams, and engage with industry groups. The benefits are substantial: reduced ethical risk, readiness for compliance requirements, and stronger trust. Companies that embrace transparency will gain a competitive advantage as responsible technology stewards.

GenAI's potential will only be realized if these systems are trusted. Transparent, accountable deployment helps ensure GenAI remains beneficial, augmenting human capabilities while upholding societal values. Enhancing transparency isn't merely about compliance; it's about creating a future where innovation and ethics coexist and where openness builds lasting confidence in the technologies shaping our world.

References:

[1] The state of AI in 2023: Generative AI’s breakout year. McKinsey (2023). https://www.mckinsey.com/capabilities/quantumblack/our-insights/the-state-of-ai-in-2023-generative-ais-breakout-year

[2] Joaquin Fernandez. The leading generative AI companies. IoT Analytics (Mar 4, 2025). https://iot-analytics.com/leading-generative-ai-companies/

[3] Trust: transparency earns trust, and right now there isn’t enough of either. Baringa (Feb 25, 2025). https://www.baringa.com/en/insights/balancing-human-tech-ai/trust

[4] Boston Consulting Group & MIT Sloan Management Review. Artificial Intelligence Disclosures Are Key to Customer Trust. MIT Sloan (2023). https://sloanreview.mit.edu/article/artificial-intelligence-disclosures-are-key-to-customer-trust/

[5] Regulatory framework proposal on AI (AI Act). European Commission, Shaping Europe’s Digital Future (2024). https://digital-strategy.ec.europa.eu/en/policies/regulatory-framework-ai

[6] The White House OSTP. Blueprint for an AI Bill of Rights, “Notice and Explanation” principle (Oct 2022). https://bidenwhitehouse.archives.gov/ostp/ai-bill-of-rights

[7] AI Transparency: Building Trust in AI. Mailchimp (2023). https://mailchimp.com/resources/ai-transparency

[8] AI Transparency 101: Communicating AI Decisions and Processes to Stakeholders. Zendata (2023). https://www.zendata.dev/post/ai-transparency-101

[9] Sandra Wachter, Brent Mittelstadt, and Chris Russell (2017). “Counterfactual Explanations without Opening the Black Box: Automated Decisions and the GDPR.” Harvard Journal of Law & Technology, 31(2): 841-887.

[10] Margaret Mitchell et al. (2019). “Model Cards for Model Reporting.” Proceedings of FAT ’19 (ACM Conference on Fairness, Accountability, and Transparency). https://vetiver.posit.co/learn-more/model-card.html

[11] National Telecommunications and Information Administration (NTIA). AI System Disclosures, NTIA AI Accountability Policy Report (March 2024). https://www.ntia.gov/issues/artificial-intelligence/ai-accountability-policy-report/developing-accountability-inputs-a-deeper-dive/information-flow/ai-system-disclosures

[12] AuditBoard. 5 AI Auditing Frameworks to Encourage Accountability. AuditBoard Blog (Oct 17, 2024). https://www.auditboard.com/blog/ai-auditing-frameworks/

[13] Salehpour Law. AI Training for Employees: Why It’s Essential for Your Business (2023). https://www.salehpourlaw.com/single-post/ai-training-for-employees-why-it-s-essential-for-your-business

[14] Stanford CRFM. The Foundation Model Transparency Index (May 2024). https://crfm.stanford.edu/fmti/May-2024/index.html

[15] Marco Tulio Ribeiro, Sameer Singh, and Carlos Guestrin (2016). “‘Why Should I Trust You?’: Explaining the Predictions of Any Classifier.” Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, pp. 1135-1144.

About the author

Srinivas Reddy Bandarapu serves as a Principal Cloud Architect at DigiTech Labs and has over 22 years of experience in IT, specializing in enterprise design, cloud systems, and transformation strategy. Working closely with companies in healthcare, financial services, technology startups, and the public sector, he has devoted the past decade to research, development, and invention in artificial intelligence, machine learning, and generative AI. He has collaborated with organizations ranging from major enterprises to mid-sized startups on issues related to distributed computing and artificial intelligence. He specializes in deep learning, including natural language processing and computer vision, and helps early-stage generative AI firms develop novel solutions using Azure services and enhanced computing resources. He is passionate about creating accessible resources that help people learn and build proficiency with AI. Srinivas Reddy holds a Master’s Degree in Computer Science and is a Senior IEEE member. He is also affiliated with the Singapore Computer Society (SCS), the Institution of Electronics and Telecommunication Engineers (IETE) in India, ITServ in the USA, and the International Association of Engineers (IAENG). Connect with Srinivas Reddy Bandarapu on LinkedIn.

At The Edge Review, we believe that groundbreaking ideas deserve a global platform. Through our multidisciplinary trade publication and journal, our mission is to amplify the voices of exceptional professionals and researchers, creating pathways for recognition and impact in an increasingly connected world.

