How Dynamic Data Governance enables instant insights for unprecedented innovation

In today’s data-driven industries, decisions often need to be made on the fly using up-to-the-second information. Real-time data governance refers to managing data availability, quality, integrity, and security continuously as data is created, rather than retrospectively in batch processes [1]. This approach has become essential as data volumes and velocities explode. IDC projects that by 2025 nearly a quarter of the world’s data will be generated in real time [2], fueled by roughly 150 billion connected devices streaming information. The surging demand for instant insights makes it critical to govern streaming data effectively. Companies must ensure that fast-moving information remains accurate, secure, and compliant—failure to do so can lead to costly mistakes or regulatory penalties. Real-time data governance has thus become a strategic imperative for enabling trustworthy, data-driven decision-making at speed.

The Role of Real-Time Data Governance in Key Sectors

Real-time data governance is rapidly redefining operational excellence across diverse industry sectors by ensuring that data flows remain accurate, secure, and compliant as they are generated. In sectors where every second counts—whether for detecting financial fraud, delivering timely patient care, or optimizing public services—streaming data must be continuously validated and monitored against evolving compliance standards. As these industries increasingly rely on instantaneous insights for strategic decision-making, real-time data governance frameworks are emerging as the backbone for not only protecting data integrity but also enabling transformative innovation and competitive advantage.

Finance

In finance, where milliseconds count, streaming data for trading, fraud detection, and risk management must be governed in real time to avoid costly errors. Real-time data governance ensures trade feeds, transaction records, and market data are correct and auditable as they flow. Strict financial regulations demand timely data oversight [3], and non-compliance can incur steep fines, so firms use real-time pipelines to stay compliant. For example, one study found that adopting real-time data pipelines cut fraud losses by 60% [4], illustrating the benefit of catching issues instantly. By enforcing quality checks and compliance rules on the fly, financial organizations can innovate with live data (algorithmic trades, digital payments) without sacrificing control or transparency.

Healthcare

In healthcare, streaming patient data enables immediate clinical decisions – but any bad data can be life-threatening or violate privacy laws. Real-time governance is needed to validate and protect data as it’s collected, ensuring accuracy and confidentiality. Otherwise, errors can harm patients or trigger penalties [5]; with strong governance, providers can use live data (e.g. early warning alerts) while staying compliant with strict regulations [1].

Public Sector

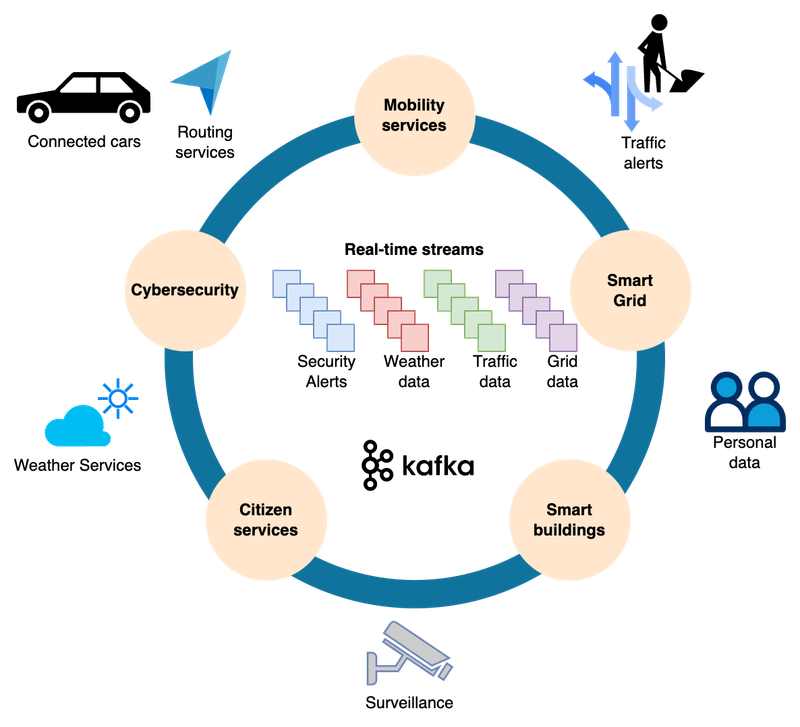

Government agencies are also embracing real-time data – from smart city sensors to emergency response systems. These applications demand data that is dependable and secure as events unfold [6].

Figure 1: Event streaming in a smart city integrates live data from domains like transportation, utilities, and public safety via a central platform. Governance policies such as schema enforcement, access controls, and encryption keep these streams trustworthy and secure as data flows across city systems.

Public sector data often involves personal information, so robust governance is crucial to maintain public trust. One example is Norway’s welfare department, which streams citizens’ life events for instant services – possible only with rigorous end-to-end governance. With proper controls on access and encryption, agencies can improve services via real-time feeds while upholding privacy and ethical standards.

Technological Enablers of Real-Time Data Governance

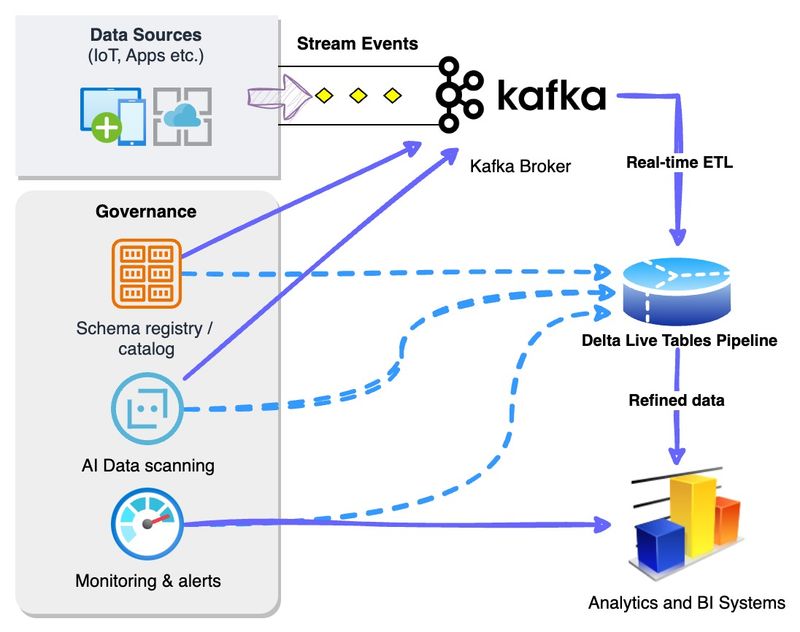

Modern data architectures and tools make real-time governance attainable. Key enablers include streaming platforms like Apache Kafka, pipeline frameworks like Databricks Delta Live Tables, and emerging AI-driven governance solutions. These technologies work together so that data “in motion” can be managed with the same rigor as data at rest.

Apache Kafka

Apache Kafka is a foundational platform for streaming data, acting as a high-throughput messaging backbone. It decouples data producers and consumers, enabling continuous data flow with low latency. For governance, Kafka’s ecosystem provides tools such as the Schema Registry, which stores the schemas registered for Kafka topics so that each message can be checked against the expected schema, maintaining data quality and consistency in real time [7]. Schema Registry also manages schema evolution through versioning and compatibility checks, giving teams a history of how each stream’s structure has changed. Additionally, Kafka supports encryption, authentication, and access controls to protect data in transit. Together, these ensure that only well-formed, authorized events propagate through the pipeline, so downstream analytics receive trustworthy data.
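To make schema enforcement concrete, here is a minimal sketch in plain Python. It does not use Kafka or the Schema Registry API; the `PAYMENT_SCHEMA` definition, the `validate` and `publish` helpers, and the in-memory list standing in for a topic are all assumptions made for illustration. It shows only the general idea of rejecting a malformed message before it reaches consumers.

```python
from dataclasses import dataclass

# Hypothetical schema for a "payments" topic: field name -> required Python type.
PAYMENT_SCHEMA = {"payment_id": str, "amount": float, "currency": str}


@dataclass
class ValidationResult:
    ok: bool
    errors: list


def validate(message: dict, schema: dict) -> ValidationResult:
    """Check that a message has exactly the schema's fields with the expected types."""
    errors = []
    for field, expected_type in schema.items():
        if field not in message:
            errors.append(f"missing field: {field}")
        elif not isinstance(message[field], expected_type):
            errors.append(f"wrong type for {field}: expected {expected_type.__name__}")
    for field in message:
        if field not in schema:
            errors.append(f"unexpected field: {field}")
    return ValidationResult(ok=not errors, errors=errors)


def publish(message: dict, topic: list, schema: dict) -> bool:
    """Append the message to the in-memory topic only if it passes validation."""
    result = validate(message, schema)
    if result.ok:
        topic.append(message)
    return result.ok


# The list below is a stand-in for a Kafka topic, not a real client.
topic = []
accepted = publish({"payment_id": "p-1", "amount": 12.5, "currency": "EUR"}, topic, PAYMENT_SCHEMA)
rejected = publish({"payment_id": "p-2", "amount": "12.5"}, topic, PAYMENT_SCHEMA)
print(accepted, rejected, len(topic))
```

In a real deployment the schema would typically live in a registry and be expressed in a format such as Avro or JSON Schema rather than a Python dictionary; the dictionary here simply keeps the sketch self-contained.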

Databricks Delta Live Tables

Databricks Delta Live Tables (DLT) simplifies building reliable streaming pipelines and embeds governance into data processing. With DLT, engineers declaratively define transformations, and the platform handles continuous execution and scaling. Crucially, DLT has built-in quality controls: users can specify data quality rules, and if incoming data violates those rules (e.g. a null value in a critical field), DLT will automatically flag or quarantine that record [8]. This prevents bad data from silently contaminating analyses. DLT also generates audit logs and data lineage information by default, providing transparency into how each stream is transformed. DLT’s automation of validation, error handling, and documentation reduces manual oversight and builds trust in real-time pipeline results.
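The flag-or-quarantine behavior can be illustrated with a small sketch. This is plain Python rather than the DLT API, and the rule names, the `apply_rules` helper, and the sample patient records are hypothetical. It shows one way named quality rules might split a batch into clean and quarantined records while recording which rule each bad record failed.

```python
from typing import Callable, Iterable

# Hypothetical rules: rule name -> predicate that returns True when the record is valid.
RULES: dict[str, Callable[[dict], bool]] = {
    "patient_id_not_null": lambda r: r.get("patient_id") is not None,
    "heart_rate_in_range": lambda r: isinstance(r.get("heart_rate"), (int, float))
    and 20 <= r["heart_rate"] <= 250,
}


def apply_rules(records: Iterable[dict], rules: dict) -> tuple[list, list]:
    """Split records into (clean, quarantined); quarantined rows keep the failed rule names."""
    clean, quarantined = [], []
    for record in records:
        failed = [name for name, check in rules.items() if not check(record)]
        if failed:
            quarantined.append({"record": record, "failed_rules": failed})
        else:
            clean.append(record)
    return clean, quarantined


# Illustrative batch: one valid record, one with a null key, one out of range.
batch = [
    {"patient_id": "a1", "heart_rate": 72},
    {"patient_id": None, "heart_rate": 80},
    {"patient_id": "a3", "heart_rate": 400},
]
clean, quarantined = apply_rules(batch, RULES)
print(len(clean), [q["failed_rules"] for q in quarantined])
```

Keeping the failed rule names alongside each quarantined record is a deliberate choice in this sketch: it gives reviewers the reason a record was held back without re-running the checks.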

AI-Driven Governance Tools

As streaming data scales up, AI-driven tools are increasingly important to govern it. Machine learning can automatically detect patterns or anomalies that humans might miss. For instance, AI models can scan data streams to identify sensitive personal data and then mask or redact it on the fly [3] – enforcing privacy policies in real time. AI can also learn what “normal” data looks like and flag unusual deviations (potential errors or security incidents) as they happen. Some platforms deploy AI agents that classify and tag data in motion and apply rules dynamically. These AI-driven solutions can improve security and compliance while reducing the need for manual intervention. In practice, as data flows through a pipeline, AI might automatically stop a credit card number from leaking or alert teams to an abnormal surge in activity – all in real time.
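As a rough illustration of on-the-fly masking, the sketch below uses a regular expression and the Luhn checksum in place of a trained model. That substitution is an assumption made to keep the example small and self-contained; a production classifier would likely be more sophisticated. The `mask_cards` helper and the sample strings are hypothetical.

```python
import re

# Candidate card numbers: 13 to 19 digits, optionally separated by single spaces or hyphens.
CARD_PATTERN = re.compile(r"\b\d(?:[ -]?\d){12,18}\b")


def luhn_valid(digits: str) -> bool:
    """Return True if the digit string passes the Luhn checksum used by payment cards."""
    total = 0
    for index, char in enumerate(reversed(digits)):
        value = int(char)
        if index % 2 == 1:  # double every second digit, counting from the right
            value *= 2
            if value > 9:
                value -= 9
        total += value
    return total % 10 == 0


def mask_cards(text: str) -> str:
    """Replace Luhn-valid card numbers with asterisks, keeping the last four digits."""

    def _mask(match: re.Match) -> str:
        digits = re.sub(r"[ -]", "", match.group())
        if not luhn_valid(digits):
            return match.group()  # leave digit runs that are not plausible card numbers
        return "*" * (len(digits) - 4) + digits[-4:]

    return CARD_PATTERN.sub(_mask, text)


# 4111 1111 1111 1111 is a widely used test card number that passes the Luhn check.
masked = mask_cards("card 4111 1111 1111 1111 charged")
untouched = mask_cards("order 1234567890123456 shipped")
print(masked)
print(untouched)
```

The checksum step reduces false positives: long digit runs such as order numbers are left alone unless they also look like a valid card number.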

Figure 2: A simplified real-time pipeline to demonstrate how technologies work together

This schematic shows raw data flowing from sources into Kafka, processed through a Delta Live Tables pipeline, and then feeding analytics systems. Meanwhile, governance tools continuously enforce schemas, check data quality and privacy, and monitor/audit the process in real time.

Regulatory Compliance and Security Considerations

Fast-moving data is still subject to strict privacy and security rules. Regulations like the EU’s GDPR and California’s CCPA impose requirements on how personal data is handled. Likewise, sector-specific rules such as HIPAA (healthcare) and PCI DSS (payment card data) mandate strong protections for sensitive information. Real-time data governance must enforce these requirements on streaming data through encryption for data in flight, fine-grained access controls on streaming platforms, and continuous audit logging of data use. Together, these measures help organizations prevent unauthorized exposure of data and avoid regulatory penalties. Governance frameworks often include real-time threat detection as well, so any unusual access pattern or anomaly in the stream triggers an instant alert and response [8]. In essence, baking robust security and compliance checks into each step of a streaming pipeline ensures that even as data moves rapidly, it remains protected under the required policies and regulations.
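The combination of access control, audit logging, and alerting described above can be sketched as follows. Everything here is an illustrative assumption: the `ACL` mapping, the topic and principal names, the threshold of three denials in 60 seconds, and the in-memory lists standing in for an audit store and an alerting system. Encryption is out of scope for this sketch.

```python
import time
from collections import defaultdict, deque

# Hypothetical ACL: topic -> principals allowed to read it.
ACL = {"patient-vitals": {"icu-dashboard"}, "payments": {"fraud-service"}}

DENIAL_THRESHOLD = 3    # denials within the window that trigger an alert
WINDOW_SECONDS = 60.0   # length of the sliding window

audit_log = []                        # every decision is recorded, allowed or not
alerts = []                           # stand-in for a real alerting channel
_recent_denials = defaultdict(deque)  # principal -> timestamps of recent denials


def authorize_read(principal: str, topic: str, now: float = None) -> bool:
    """Check the ACL, append an audit entry, and raise an alert on repeated denials."""
    now = time.time() if now is None else now
    allowed = principal in ACL.get(topic, set())
    audit_log.append({"ts": now, "principal": principal, "topic": topic, "allowed": allowed})
    if not allowed:
        denials = _recent_denials[principal]
        denials.append(now)
        # Drop denials that have fallen out of the sliding window.
        while denials and now - denials[0] > WINDOW_SECONDS:
            denials.popleft()
        if len(denials) >= DENIAL_THRESHOLD:
            alerts.append({"ts": now, "principal": principal, "reason": "repeated denied reads"})
    return allowed


# Explicit timestamps keep the example deterministic.
first = authorize_read("icu-dashboard", "patient-vitals", now=0.0)
for t in (1.0, 2.0, 3.0):
    authorize_read("unknown-app", "patient-vitals", now=t)
print(first, len(audit_log), alerts)
```

Logging allowed reads as well as denied ones matters for auditability: the record shows who accessed what, not just who was blocked.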

Challenges and Future Outlook

Implementing real-time data governance comes with challenges. One major hurdle is the sheer volume and velocity of data streams – systems must handle an ongoing firehose of information with minimal delay [10]. This can strain infrastructure and leave little time for batch-style data cleansing. Another challenge is the complexity of managing distributed streaming architectures spanning many components. Ensuring a unified governance approach (consistent metadata, lineage, and access controls) across numerous platforms is non-trivial. Additionally, real-time solutions demand significant computing resources and specialized skills, so organizations must weigh the benefits against the costs and effort involved [11].

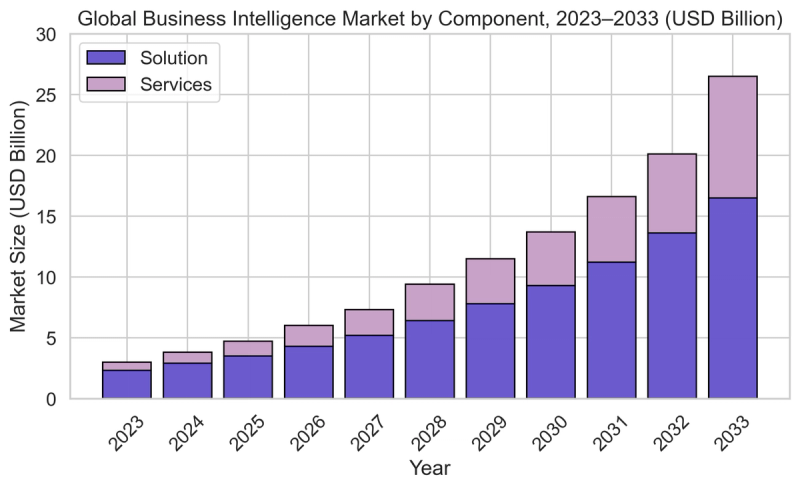

Despite these challenges, data governance is increasingly moving toward real-time by default. We can expect more automation and intelligence in governance processes, with AI playing a growing role in managing metadata, detecting anomalies, and enforcing policies automatically. Real-time governance is also quickly becoming a standard expectation. Adoption numbers bear this out: an analysis predicts that 70% of organizations will be using real-time analytics by 2025, up from 40% in 2020 [12]. We can illustrate this trajectory with the following visualization:

Figure 3: Global Business Intelligence market growth

This rapid rise in real-time analytics adoption shows that businesses are no longer willing to wait for data. Investing in robust real-time data governance will not only ensure compliance but also offer a competitive edge. Organizations that fully invest in enterprise data governance have been found to outperform their peers by over 20% on key business metrics [12] – a compelling incentive to get governance right.

Conclusion

Real-time data governance has become a core requirement in today’s fast-paced world. It enables organizations in finance, healthcare, and government to harness streaming data for immediate insights without compromising compliance, security, or accuracy. By deploying the right tools and practices, enterprises ensure that speed does not trump privacy or data integrity. This leads to better decisions made in the moment, fewer data mishaps, and greater confidence from regulators and customers. In an era of continuous data generation and consumption, those who govern their data streams effectively gain a significant advantage – they can act on information faster and with greater assurance. Real-time data governance is thus not just about avoiding problems, but about enabling agile, responsible data-driven operations for the future.

References:

[1] Ovais Naseem. “Real-Time Data Governance: Ensuring Quality and Compliance in Data Warehouses.” Digital CxO (Sept. 12, 2024) https://digitalcxo.com/article/real-time-data-governance-ensuring-quality-and-compliance-in-data-warehouses

[2] IDC White Paper (Seagate). “The Digitization of the World – From Edge to Core.” (Nov. 2018) https://www.seagate.com/files/www-content/our-story/trends/files/dataage-idc-report-final.pdf

[3] “Real-Time Regulatory Reporting: Streamlining Compliance in Financial Institutions.” Striim Blog (2023) https://www.striim.com/blog/real-time-regulatory-reporting/

[4] Dion Keeton. “How Real-Time Data Pipelines Drive Financial Insights in Fintech.” Meroxa Blog (Feb. 18, 2025) https://meroxa.com/blog/how-real-time-data-pipelines-drive-financial-insights-in-fintech

[5] Arcadia. “The consequences of poor data governance in healthcare.” (2023) https://arcadia.io/resources/data-governance-in-healthcare

[6] Kai Waehner. “Event Streaming with Kafka as Foundation for a Smart City.” (Feb. 14, 2021) https://www.kai-waehner.de/blog/2021/02/14/apache-kafka-smart-city-public-sector-use-cases-architectures-edge-hybrid-cloud

[7] Confluent. “Schema Registry for Apache Kafka – Documentation.” (2023) https://docs.confluent.io/platform/current/schema-registry/index.html

[8] Tredence. “Enhancing Data Governance with Databricks Delta Live Tables.” (2022) https://www.tredence.com/blog/enhancing-data-governance-capabilities-with-databricks

[9] Striim. “Reinventing Data Governance for the AI Era.” (2023) https://www.striim.com/blog/reinventing-data-governance-for-the-ai-era

[10] Secoda. “Why is data quality important in real-time data processing?” (Sept. 16, 2024) https://www.dataversity.net/10-data-streaming-challenges-enterprises-face-today

[11] Exclaimer. “Top 8 Data Governance Trends for 2025.” (Sept. 19, 2024) https://exclaimer.com/blog/top-8-data-governance-trends-for-2025

[12] Business Intelligence Statistics (2025). Market.us (2023) https://scoop.market.us/business-intelligence-statistics

About the author

Nishchai Jayanna Manjula is a seasoned Senior Specialist Solutions Architect with nearly 15 years of experience in consultancy and advisory roles, specializing in data analytics, AI/ML, and cloud computing. He holds a bachelor’s degree in Computer Science and is a Fellow at IETE. Known for his expertise in modernizing data warehouses, data governance, and data security for GenAI, Nishchai collaborates with organizations to unlock the full potential of their data. He actively contributes thought leadership through blogs, journals, and public speaking on platforms like re:Invent. Passionate about innovation, Nishchai advises a start-up company on product development and provides strategic guidance to businesses, including those in the financial, automotive, and manufacturing sectors, to solve complex data challenges. Connect with Nishchai on LinkedIn.

At The Edge Review, we believe that groundbreaking ideas deserve a global platform. Through our multidisciplinary trade publication and journal, our mission is to amplify the voices of exceptional professionals and researchers, creating pathways for recognition and impact in an increasingly connected world.

